By Bennett Sherry

“Artificial intelligence has a long history, dating back to the 1950s when early experiments aimed to create computer programs that could learn and reason like humans. Over the decades, AI has made significant strides, revolutionizing industries from healthcare to finance. Today, AI is a ubiquitous part of our lives, powering virtual assistants, self-driving cars, and more. Understanding the history of AI is crucial for educators looking to prepare students for a world that is increasingly driven by intelligent machines.”

That was written by a machine.

If you’re like me, the idea of artificial intelligence (AI) in education probably has you looking over your shoulder. The robots are coming for our jobs! There’s no place for humanists in a future ruled by programmers! (Until the sentient Roombas come for them, too.) Pretty soon, I’ll be ready to throw my Crocs into the cogs of DALL-E.

Is all the anxiety well-founded? Maybe.

There is danger here, but there are possibilities, too. The tools that have emerged in the past year are powerful and flexible. AI offers great promise, such as improved access to healthcare and education. AI has the potential to transform our classrooms in ways that computers have so far failed to do. While AI is impressive, it should not—and cannot—replace the work that teachers do. Students need teachers now more than ever to understand what sources to trust and how to evaluate the claims they encounter.

I have more examples below, but let’s begin by looking at the AI-generated paragraph above, which was written in response to the prompt, “Write a 50-word blog post for world history teachers about the history of artificial intelligence.” For starters, it’s 79 words long (but let’s not be too critical—this blog had an 800-word “limit”). Let’s dig deeper. I doubt many Big History or World History Project teachers would consider the 1950s a “long history.”

A natural history of artificial intelligence

Ancient history and myth are littered with dreams of thinking machines. Over 2,700 years ago, Homer’s Iliad told how the Greek god Hephaestus created automatons, like the golden women who attended him in his forge. Buddhist legends include similar stories of animate statues guarding the Buddha’s relics. Chinese chronicles feature stories of machines we would now call robots. In Jewish tradition, there are stories of golems, clay figures that come to life when certain holy words are placed inside them. Al-Jazari, the prolific inventor of the Islamic Golden Age, designed many different automata in The Book of Knowledge of Ingenious Mechanical Devices.

An illustration of what some scholars claim to be four programmable automata musicians that floated on a boat to entertain guests. Freer Gallery of Art Collection, Smithsonian National Museum of Asian Art, CC0 1.0.

Other stories, concepts, and alchemical experiments continued to imagine autonomous beings into the modern period. That said, we don’t need to go too far into the past to understand the potential future of AI in the classroom. Still, we should go back a bit further than the 1950s, to the most transformative education technology in history.

“Among the greatest benefactors of mankind”

In 2000, Professor Steven D. Krause wrote about the most transformative educational technology in the history of humanity: the chalkboard. Not computers. Not the television or radio. The big piece of wood we scratch with chalk. Chalkboards fundamentally transformed how teachers interacted with students by permitting large-group instruction.[1]

Krause used the example to reflect on the potential of computers in classrooms. Today, we rarely think of the blackboard as “tech,” but in the mid-nineteenth century it was revolutionary, with one scholar claiming it “deserves to be ranked among the best contributors to learning and science, if not among the greatest benefactors of mankind.” Now, two centuries after the invention of the blackboard and 23 years after Krause first published his article, computers are more common, but they still haven’t had the revolutionary effects of the chalkboard. Will AI technologies help computers finally reach their educational potential?

We don’t need AI to teach. Just like we don’t need computers. Or blackboards. But they sure help. Especially if they—as Krause puts it—“enhance what teachers are already doing,” and if the makers of new technologies can “understand the realities of the classroom and enlist teachers as collaborators rather than regarding them as obstacles to progress.”

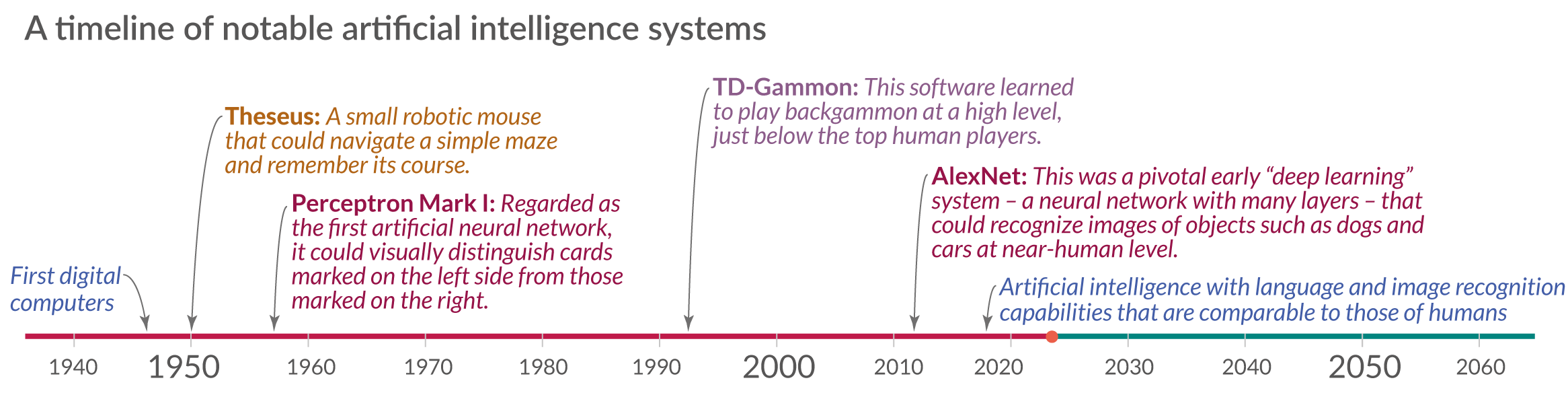

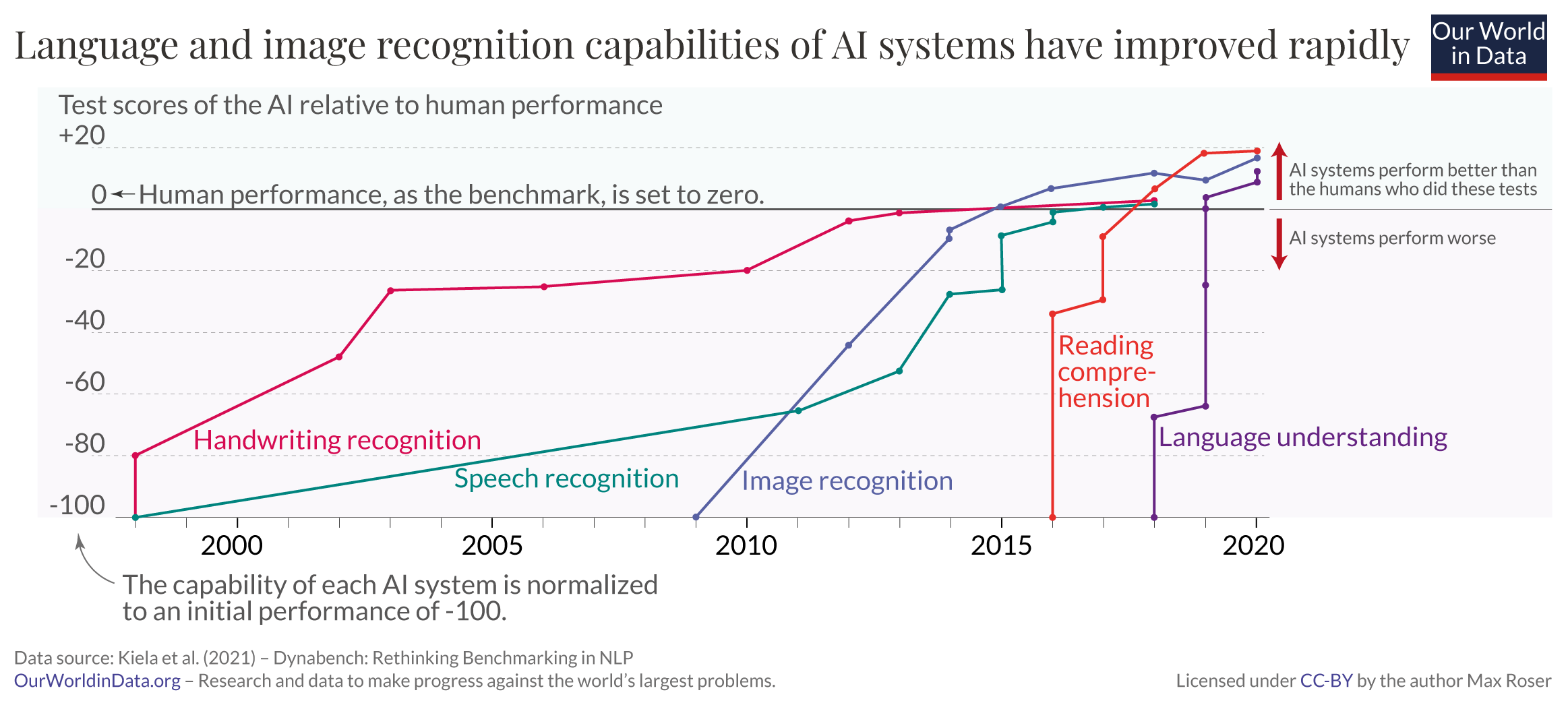

Timelines of AI development and its performance against humans in controlled tests. By OWiD, CC-BY.

To err is human…and so is AI

We can’t simply trust AI to get the history right. And if AI is going to be useful in the history classroom, it needs to enhance what teachers are already doing. Well, one of the things that OER Project teachers are already doing is teaching students how to source and claim test, which will enable them to evaluate the evidence they encounter.

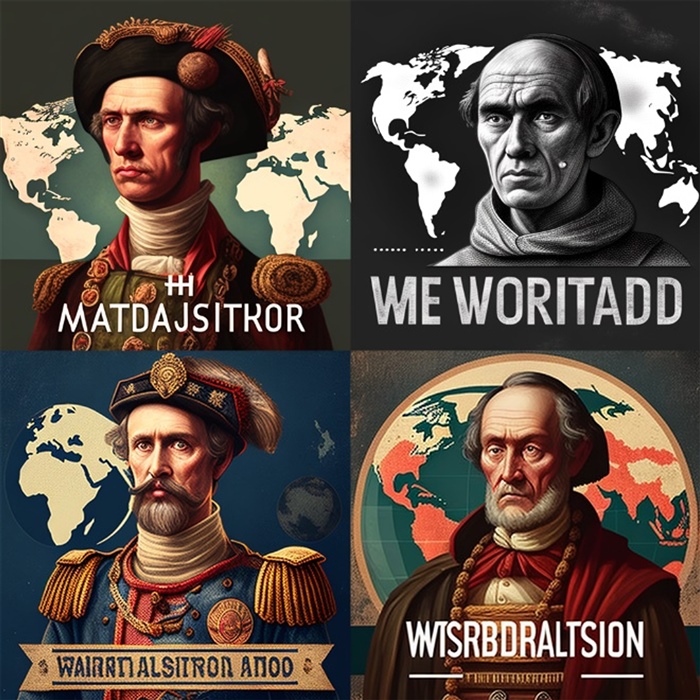

AI is an intriguing and powerful tool, but it’s made by humans and trained on our libraries—and not just the good stuff. For example, I’m obsessed with the AI art generator Midjourney. It’s some seriously cool stuff. When I started thinking about this blog, I threw some prompts at it. Here’s what it spit out when I asked it to “/imagine a world history teacher”:

Hmm. OK. Well, I can see how that prompt might be confusing. The eagle-eyed among you probably also noticed that these four people share a couple of things in common. I decided to make things simpler, and change the prompt to “/imagine a teacher”:

Lots of chalkboards, not much diversity. This sparked another idea for a prompt: “/imagine an American politician”:

I’m detecting a pattern.

Please understand, this was not a scientific analysis. I didn’t conduct extensive testing. I’m not an expert in AI prompt writing. But my humanities training did prompt me to ask the question: Why are all these “people” white, and why are most of them men? Thankfully, there’s plenty of research on this problem, lots of it written by people who approached the topic with diligence.

Ideas surrounding race and gender are embedded in our cultures. These constantly shifting cultural landscapes aren’t easy to quantify and program. We need humans to navigate the intricacies and subtleties. AI is trained to make predictions based on what it has “learned,” and therefore it will mirror what’s in its training materials, which in most cases are a library of texts and images. And in the billions of texts and images in the library of humanity, plenty are racist and sexist. As we begin to rely on these systems for things like healthcare and education, we need to be asking tough questions about how systems are trained. We also need to ask about the human labor doing the training. For example, according to TIME, OpenAI relied on Kenyan workers who were paid less than $2 per hour to train ChatGPT to be less toxic.

We need to be aware of the pitfalls and consider the role that teachers will play in helping students understand them. But the future of AI in education also presents us with many opportunities to improve access and equity in education.

AI testers

Teachers have a critical role to play in any positive AI future. Students need to learn to evaluate sources produced by AI—both how to identify them as artificial and how to locate flaws. Otherwise, we’ll be using a revolutionary tool to reinforce existing inequalities and injustices.

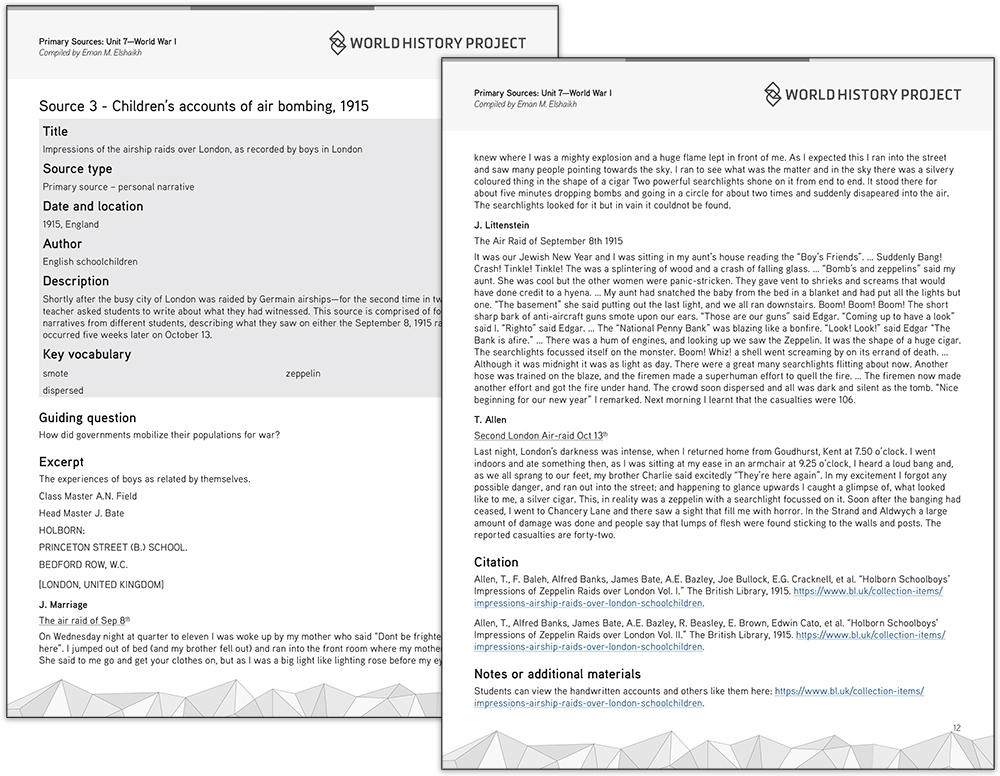

AI has the potential to enhance what you’re already doing in your BHP or WHP classrooms. One small example: You could do a quick sourcing activity by extending WHP’s World War I primary source collection.

In that collection, there are three letters written by boys in London in 1915, in which they describe their experiences during German airship raids. If you add a fourth source, such as the one below, written by ChatGPT, can your students identify the letter written by AI? What evidence clued them in? What tripped them up? Maybe it’s the language used or that the narrative sounds generic compared to the other excerpts. The possibilities here are endless, so share your ideas in the comments section below!

As the sirens blared through the streets, we knew that another German air raid was underway. I rushed outside with my family and neighbors, and we looked up to see the enemy airships hovering above us. The searchlights illuminated the sky, and we could see the bombs falling from the airships, streaking towards the ground. I remember feeling a sense of dread and helplessness, as we watched the bombs hit their targets, and the flames and smoke rose into the sky. We knew that people’s homes and businesses were being destroyed, and we feared for their safety. As the raid continued, we could hear the sound of anti-aircraft guns firing from nearby batteries, and we saw the tracer bullets streaking through the sky. The air was filled with the sounds of explosions, gunfire, and the roar of the airships’ engines. Despite the chaos and destruction around us, I also remember feeling a sense of defiance and determination. We were not going to let the enemy break our spirit or our resolve. We knew that we had to do our part to defend our country and our way of life.

We’re all anxious about a future where AI teaches our students. AI is a potentially powerful classroom tool, but it can’t replace teachers. As with any tool, students need to learn how to use AI and how to evaluate what it produces. Students need tools like the claim testers to evaluate the stuff they encounter online. How do you calculate the authority of a text written by AI? How do you find verifiable evidence that something was written by a human? Students need great teachers helping them navigate questions like these. After all, the chalkboard transformed the classroom, but it couldn’t hold the chalk.

[1] Thanks to Professor Bob Bain for this source.

About the author: Bennett Sherry holds a PhD in history from the University of Pittsburgh and has undergraduate teaching experience in world history, human rights, and the Middle East. Bennett writes about refugees and international organizations in the twentieth century and is one of the historians working on the OER Project courses.

Cover image: Created using Midjourney (/imagine style of Vincent van Gogh, an army of robots marching) Creative Commons Noncommercial 4.0 Attribution International License.
